Static Analysis

Tell a PL researcher that you have a new programming language and one of the first things they want to know is whether you can do static analysis on it. Lucky for us, we can! But first, some background in this space:

Prior work on static analysis for surveys

I mentioned earlier that there is some prior work on using programming languages to address survey problems.

Static analysis in Topsl is constrained by the fact that Topsl permits users to define questions whose text depends on the answers to previous questions. Matthews is primarily concerned that each question is asked. While it can support randomization, this feature belongs to the containing language, Scheme, and so is not considered part of the Topsl core. Although the 2004 paper mentions randomization as a feature that can be implemented, there is no formal treatment in any of the three Topsl-related publications.

If we also consider type-checking answer fields or enforcing constraints on responses as static analysis, then most online survey tools, Blaise, QPL, and probably many more tools and services perform some kind of static analysis.

Static Analysis in SurveyMan

Surveys are interpreted by a finite state machine implemented in JavaScript. To ensure that we don't cause undefined behavior, we must check that the csv is well-formed. After parsing, the SurveyMan runtime performs the following checks:

- Forward Branch Since we require the survey to be a DAG, all branching must be forward. We can do this easily by comparing branch destination ids with the ids of the branch question's containing block. This check takes time linear in the block's depth.

- Top Level Branch At the moment, we only allow branching to top level blocks. This check is constant.

- Consistent Branch Paradigms Having one "true" branch question per top level block is critically important for our survey language. Every block has a flag for its "branch paradigm." This flag indicates whether there is no branching in the block, one branch question, or whether the block should emulate sampling behavior. This check ensures that for every block, if it has a parent or siblings the following relations hold:

[table id=1 /]

We use the following classification algorithm to assign branch paradigms:

function getAllQuestionsForBlock

input : a block

output : a list of questions

begin

questions <- this block's questions

if this block's branch paradigm is SAMPLE

q <- randomly select one of questions

return [ q ]

else

qList <- shuffled questions

for each subblock b in this block

begin

bqList <- getAllQuestionsForBlock(b)

append bqList to qList

end

return qList

fi

end

function classifyBlockParadigm

input : a block

output : one of { NONE, ONE, SAMPLE }

begin

questions <- this block's questions

subblocks <- this block's subblocks

if every q in questions has a branch map

branchMap <- select one branch map

if every target block in branchMap is NULL

return SAMPLE

else

return ONE

fi

else if one q in questions has a branch map

return ONE

else if there is no q in questions having a branch map

for each b in subblocks

begin

paradigm <- classifyBlockParadigm(b)

if paradigm is ONE

return ONE

end

return NONE

fi

end

The ONE branch paradigm gets propagated up the block hierarchy and pushes constraints down the block hierarchy. The SAMPLE branch paradigm pushes constraints down the block hierarchy, but has no impact on parent blocks. Finally, NONE is the weakest paradigm, as it imposes no constraints on its parents or children. All blocks are set to NONE by default and are overwritten by ONE when appropriate.

- No Duplicates We check the features of each question to ensure that there are no duplicate questions. Duplicates can creep in if surveys are constructed programmatically.

- Compactness Checks whether we have any gaps in the order of blocks. Block ordering begins at 1. This check is linear in the total number of blocks. (This check should be deprecated, due to randomized blocks.)

- No branching to or from top level randomized blocks We can think of the survey as a container for blocks, and blocks as containers for questions and other blocks. The top-level blocks are those immediately contained by the survey. While we permit branching from randomizable blocks that are sub-blocks of some containing block, we do not allow branching to or from top-level randomized blocks. The check for whether we have branched from a top-level block is linear in the number of top-level blocks. The check for whether we try branching to a top-level randomizable block is linear in the number of questions.

- Branch map uniformity If a block has more than one branch question, then all of its questions must be branch questions. The options and their targets are expected to be aligned. In the notation we used in a previous post, for a survey csv $$S$$, if one question, $$q_1$$ in the block spans indices $$i,...,j$$ and another question $$q_2$$ spans indices $$k,...,l$$, then we expect $$S[BLOCK][i] = S[BLOCK][k] \wedge ... \wedge S[BLOCK][j] = S[BLOCK][l]$$.

- Exclusive Branching Branching is only permitted on questions where EXCLUSIVE is true.
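As a rough illustration of the Forward Branch and Top Level Branch checks, here is a minimal Python sketch. It is not the SurveyMan implementation: the tuple encoding of block ids, the `branches` mapping, and the function names are all assumptions made for the example, and NULL destinations are left out.

```python
from typing import Dict, List, Tuple

# Hypothetical encoding: block "1.2" is the tuple (1, 2).
BlockId = Tuple[int, ...]


def is_top_level(block_id: BlockId) -> bool:
    """Top-level blocks have depth 1."""
    return len(block_id) == 1


def check_branches(branches: Dict[BlockId, List[BlockId]]) -> List[str]:
    """Return error messages; an empty list means both checks pass.

    `branches` maps the block containing a branch question to the
    destination blocks listed in that question's branch map.
    """
    errors = []
    for source, destinations in branches.items():
        for dest in destinations:
            if not is_top_level(dest):
                errors.append(f"{source} -> {dest}: destination is not top level")
            # Compare the destination with the source's top-level ancestor.
            elif dest[0] <= source[0]:
                errors.append(f"{source} -> {dest}: branch is not forward")
    return errors


print(check_branches({(1, 2): [(2,), (3,)]}))   # no errors expected
print(check_branches({(2,): [(1,), (3, 1)]}))   # one error per destination
```

Collecting messages rather than raising on the first failure lets a survey author see every offending branch in one pass.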

The above are required for correctness of the input program. We also report back the following information, which can be used to estimate some of the dynamic behavior of the survey:

- Maximum Entropy

For a survey of $$n$$ questions each having $$m_i$$ responses, we can trivially obtain a gross upper bound on the entropy of the survey : $$n \log_2 (\text{max}(\lbrace m_i : 1 \leq i \leq n \rbrace))$$. For example, a survey of 10 questions with at most 4 options each carries at most $$10 \log_2 4 = 20$$ bits.

- Path Lengths

- Minimum Path Length Branching in surveys is typically related to some kind of division in the underlying population. In the previous post, we showed how branching could be used to run two versions of a survey at the same time. More often branching is used to restrict questions by relevance. It will be appropriate for some subpopulations to see certain questions, but not others.

When surveys have sufficient branching, it may be possible for some respondents to answer far fewer questions than the survey designer intended -- they may "short circuit" the survey. Sometimes this is by design; if we are running a survey but are only interested in curly-haired respondents, we have no way to screen the population over the web. We may design the survey so that answering "no" to "Do you have curly hair?" sends the respondent straight to the end. In other cases, this is not the intended effect and is either a typographical error or is a case of poor survey design.

We can compute minimum path length using a greedy algorithm:

function minPathLength

input : survey

output : minimum path length through the survey

begin

size <- 0

randomizableBlocks <- randomizable top level blocks

staticBlocks <- static top level blocks

branchDestination <- stores block that we will branch to

for block b in randomizableBlocks

begin

size <- size + length(getAllQuestionsForBlock(b))

end

for block b in staticBlocks

begin

paradigm <- b's branch paradigm

if branchDestination is initialized but b is not branchDestination

continue

fi

size <- size + length(getAllQuestionsForBlock(b))

if branchDestination is initialized and set to b

unset branchDestination

fi

if paradigm = ONE

branchMapDestinations <- b's branch question's branch map values

possibleBlockDestinations <- sort branchMapDestinations ascending

branchDestination <- last(possibleBlockDestinations)

fi

end

return size

end
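The greedy pseudocode above can be sketched in Python as follows. The `Block` class and its fields are hypothetical stand-ins for SurveyMan's top-level blocks: `size` plays the role of `length(getAllQuestionsForBlock(b))`, and an empty `branch_destinations` list means the block has no branch question. NULL destinations are not modeled.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Block:
    """Hypothetical stand-in for a top-level block."""
    number: int                 # position among the top-level blocks
    size: int                   # questions a respondent sees in this block
    randomizable: bool = False
    branch_destinations: List[int] = field(default_factory=list)


def path_length(blocks: List[Block], shortest: bool = True) -> int:
    # Randomizable top-level blocks cannot be branched to or from,
    # so every respondent sees them.
    size = sum(b.size for b in blocks if b.randomizable)
    static = sorted((b for b in blocks if not b.randomizable),
                    key=lambda b: b.number)
    destination: Optional[int] = None
    for b in static:
        if destination is not None and b.number != destination:
            continue  # skipped over by an earlier branch
        destination = None
        size += b.size
        if b.branch_destinations:
            # Greedy choice: the farthest target for the short path,
            # the nearest target for the long path.
            pick = max if shortest else min
            destination = pick(b.branch_destinations)
    return size


survey = [
    Block(1, 2, branch_destinations=[2, 4]),
    Block(2, 3),
    Block(3, 5, randomizable=True),
    Block(4, 1),
]
print(path_length(survey, shortest=True))   # 8
print(path_length(survey, shortest=False))  # 11
```

Passing `shortest=False` flips the greedy choice to the nearest branch target, which is the only difference the post describes between the two computations.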

- Maximum Path Length Maximum path length can be used to estimate breakoff. This information may also be of interest to the survey designer -- surveys that are too long may require additional analysis for inattentive and lazy responses.

Maximum path length is computed using almost exactly the same algorithm as min path length, except we choose the first block in the list of branch targets, rather than the last.

We verified these algorithms empirically by simulating 1,000 random respondents and returning the minimum path.

- Average Path Length Backends such as AMT require the requester to provide a time limit on surveys and a payment. While we currently cannot compute the optimal payment in SurveyMan, we can use the average path length through the survey to estimate the time it would take to complete, and from that compute the baseline payment.

The average path length is computed empirically. We have implemented a random respondent that chooses paths on the basis of positional preferences. One of the profiles implemented is a Uniform respondent; this profile simply selects one of the options with uniform probability over the total possible options. We run 5,000 iterations of this respondent to compute the average path length.

Note that average path length is the average over possible paths; the true average path length will depend upon the preferences of the underlying population.
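To make the empirical estimate concrete, here is a small Python sketch of a Uniform respondent walking a toy survey. The `Survey` encoding (top-level block number mapped to a question count and an optional list of branch destinations, one per answer option) is an assumption for the example, not SurveyMan's data model.

```python
import random
from typing import Dict, List, Optional, Tuple

# Hypothetical encoding: block number -> (questions seen, branch destinations).
# There is one destination per option of the block's branch question;
# None means the block does not branch.
Survey = Dict[int, Tuple[int, Optional[List[int]]]]


def simulate_path_length(survey: Survey, rng: random.Random) -> int:
    numbers = sorted(survey)
    length, i = 0, 0
    while i < len(numbers):
        size, destinations = survey[numbers[i]]
        length += size
        if destinations:
            target = rng.choice(destinations)  # uniform over the options
            if target <= numbers[i]:
                raise ValueError("branches must be forward")
            i = numbers.index(target)
        else:
            i += 1
    return length


def average_path_length(survey: Survey, iterations: int = 5000, seed: int = 0) -> float:
    rng = random.Random(seed)
    total = sum(simulate_path_length(survey, rng) for _ in range(iterations))
    return total / iterations


toy = {1: (2, [2, 3]), 2: (3, None), 3: (1, None)}
print(average_path_length(toy))  # close to 4.5: paths of length 6 and 3, equally likely
```

Swapping `rng.choice` for a different selection rule would model the other positional-preference profiles mentioned above.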


Survey Language Essentials

As discussed in a previous post, web surveys are increasingly moving toward designs that more closely resemble experiments. A major goal in the SurveyMan work is to capture the underlying abstractions of surveys and experiments. These abstractions are then represented in the Survey language and runtime system.

Language

When the topic of a programming language for surveys came up, I initially proposed a SQL-like approach. Emery quite strongly suggested that SQL was, in general, a non-starter. He suggested a tabular language as an alternative approach that would capture the features I was so keen on, but in a more accessible format. To get started, he suggested I look at Query by Example.

The current language can be written as a csv in a spreadsheet program. This csv is the input to the SurveyMan runtime system, which checks for correctness of the survey and performs some lightweight static analysis. The runtime system then sends the survey to the chosen platform and processes results until the stopping condition is met.

The csv format has two mandatory columns and nine optional semantically meaningful columns. Most surveys are only written with a subset of the available columns. The most up-to-date information can be found on the SurveyMan wiki. The mandatory columns are QUESTION and OPTIONS. Column names can appear in any case and any columns that are not semantically meaningful to the SurveyMan runtime system will be pushed through from the input csv to the output csv.

Columns may appear in any order. Rows are partitioned into questions; all rows belonging to a particular question must be grouped together, but questions may appear in any order. Questions use multiple rows to represent different answer options. For example, if a question asks what your preferred ice cream flavor is and the options are vanilla, chocolate, and strawberry, each of these will appear in their own row, with the exception of the first option, which will appear on the same row as the question. More formally, if we let the csv be represented by a dictionary indexed on column name and row numbers, then for Survey $$S$$ having column set $$c$$, some question $$Q_i$$ spanning rows $$\lbrace i, i+1, ..., j\rbrace, i < j $$, would be represented by a $$|c|\times (j - i + 1)$$ matrix: $$S[:][i:j+1]$$, and its answer options would be a vector represented by $$S[OPTIONS][i:j+1]$$.
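The row layout can be illustrated with a short Python sketch that groups csv rows into questions. It assumes that continuation rows leave the QUESTION cell empty, which is one reading of the description above rather than a documented rule, and `group_questions` is a hypothetical helper, not part of SurveyMan.

```python
import csv
import io
from typing import Any, Dict, List


def group_questions(csv_text: str) -> List[Dict[str, Any]]:
    """Group rows into questions, assuming a well-formed csv whose
    continuation rows have an empty QUESTION cell."""
    reader = csv.DictReader(io.StringIO(csv_text))
    questions: List[Dict[str, Any]] = []
    for row in reader:
        # Column names may appear in any case, so normalize them.
        row = {name.strip().upper(): (value or "").strip()
               for name, value in row.items()}
        if row["QUESTION"]:
            questions.append({"question": row["QUESTION"], "options": []})
        if row["OPTIONS"]:
            questions[-1]["options"].append(row["OPTIONS"])
    return questions


text = (
    "question,options\n"
    "What is your preferred ice cream flavor?,vanilla\n"
    ",chocolate\n"
    ",strawberry\n"
)
print(group_questions(text))
```

The list of options for each question corresponds to the vector $$S[OPTIONS][i:j+1]$$ in the notation above.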

Display and Quality Control Abstractions

The order of the successive rows in a question matters if the order of the options matters. In our ice cream example, the order does not matter, so we may enter the options in any order. If we were instead to ask "Rate how strongly you agree with the statement, 'I love chocolate ice cream.'", the options would be what's called a Likert scale. In this case, the order in which the options are entered is semantically meaningful. The ORDERED column functions as a flag for this interpretation. Its default value is false, so if the column is omitted entirely, every question's answer options will be interpreted as unordered.

We do not express display properties in the csv at all. We will discuss how questions are displayed in the runtime section of this post. We had considered adding a CLASS column to the csv to associate certain questions with individual display properties, but this would have the effect of not only introducing, but encouraging non-uniformity in the question display. This introduces additional factors that we would have to model in our quality control. Collaborators who are interested in running web experiments are the most keen on this feature; as we expand from surveys into experiments, we would need to understand what kinds of features they hope to implement with custom display classes, and determine what the underlying abstractions are.

That said, it happens that two columns we use for quality control also provide one piece of meaningful display information. EXCLUSIVE is a boolean-valued column indicating whether a respondent can only pick one of the answer options, or if they can choose multiple answer options. When EXCLUSIVE is true for a question, its answer options appear as radio button inputs and for $$m$$ options, the total number of unique responses is $$m$$. When EXCLUSIVE is set to false, the answer options appear as checkboxes and the total number of unique responses is $$2^m - 1$$ (we don't allow users to skip over answering questions). We also support a FREETEXT column, which can be interpreted as a boolean-valued column or a regular expression. When FREETEXT ought to represent a regular expression $$r$$, it should be entered into the appropriate cell as $$\#\lbrace r \rbrace$$.
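The two response counts can be checked by enumeration. The Python sketch below is only an illustration; `possible_responses` is a hypothetical helper, not a SurveyMan function.

```python
from itertools import combinations
from typing import List, Tuple


def possible_responses(options: List[str], exclusive: bool) -> List[Tuple[str, ...]]:
    """Enumerate every response a respondent could submit."""
    if exclusive:
        # Exactly one option may be chosen: m responses.
        return [(o,) for o in options]
    # Any non-empty subset may be chosen: 2^m - 1 responses.
    return [c for k in range(1, len(options) + 1)
            for c in combinations(options, k)]


flavors = ["vanilla", "chocolate", "strawberry"]
print(len(possible_responses(flavors, exclusive=True)))   # 3
print(len(possible_responses(flavors, exclusive=False)))  # 7
```

The empty subset is excluded because respondents cannot skip a question.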

We also provide a CORRELATED column to assist in quality control. Our bug detection checks for correlations between questions to see if any redundancy can be removed. However, sometimes correlations are desired, whether to confirm a hypothesis or as a form of quality control. We allow sets of questions to be marked with an arbitrary symbol, indicating that we expect them to be correlated. As we'll discuss in later posts, this information can be critical in identifying human adversaries.

Control Flow

Prior work on survey languages has focused on how surveys, like programs, have control flow. We would be remiss in our language design if we failed to address this.

The most basic survey design is a flat survey. The default behavior in SurveyMan is to randomize the order of the questions. This means that for a survey of $$n$$ questions, there are $$n$$ factorial possible orderings (aside : WordPress math mode doesn't allow "!"?!?!?). It is not always desirable to display one of every possible ordering to a respondent. There are cases where we will want to group questions together. These groups are called "blocks."

Blocks are a critical basic unit for both surveys and experiments. Conventional wisdom in survey design dictates that topically similar questions be grouped together. We recently launched a survey on wage negotiation that has three topical units : demographic information, work history, and negotiation experience. Experiments on learning require a baseline set of questions followed by the treatment questions and follow-up questions. Questions can be grouped together using the BLOCK column.

Blocks are represented by the regular expression _?[1-9][0-9]*(\._?[1-9][0-9]*)* . Numbering begins at 1. Like an outline, the period indicates hierarchy: blocks numbered 1.1 and 1.2 are contained in block 1. We can think of block numbers as arrays of integers. We define the following concepts:

function depth

input : A survey block

output : integer indicating depth

begin

idArray <- block id as an array

return length(idArray)

end

function questionsForBlock

input : A surveyBlock

output : A set of questions contained in this particular block

begin

topLevelQuestions <- a list of questions contained directly in this block

subblocks <- all of the blocks contained in this block

questions <- return value initialized with topLevelQuestions

for block in subblocks

do

questions <- questions + questionsForBlock(block)

done

return questions

end
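For readers who prefer code to pseudocode, here is a small Python sketch of block ids as arrays of integers, using the regular expression given above. The function names and the `(number, randomizable)` pair encoding are assumptions for illustration; the boolean records whether a level carries the optional leading underscore allowed by the regular expression.

```python
import re
from typing import List, Tuple

BLOCK_ID = re.compile(r"_?[1-9][0-9]*(\._?[1-9][0-9]*)*")


def parse_block_id(text: str) -> List[Tuple[int, bool]]:
    """Split a block id into (number, has_underscore) pairs, one per level."""
    if not BLOCK_ID.fullmatch(text):
        raise ValueError(f"not a block id: {text!r}")
    return [(int(part.lstrip("_")), part.startswith("_"))
            for part in text.split(".")]


def depth(text: str) -> int:
    return len(parse_block_id(text))


def contains(outer: str, inner: str) -> bool:
    """True if block `outer` is a proper ancestor of block `inner`."""
    a = [n for n, _ in parse_block_id(outer)]
    b = [n for n, _ in parse_block_id(inner)]
    return len(a) < len(b) and b[:len(a)] == a


print(parse_block_id("1.2._3"))  # [(1, False), (2, False), (3, True)]
print(depth("1.2.3"))            # 3
print(contains("1", "1.2"))      # True
```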

We can say that blocks have the following two properties:

- Ordering : Let the id for a block $$b$$ of depth $$d$$ be represented by an array of length $$d$$, which we shall call $$id$$. Let $$index_d$$ be a function of a question representing its dynamic index in the survey (that is, the index at which the question appears to the user). Then the block ordering property states that

$$\forall b_1 \forall b_2 \bigl( d_1 = d_2 \; \wedge \; id_{b_1}[\mathbf{\small depth}(b_1)-1] < id_{b_2}[\mathbf{\small depth}(b_2)-1] \; \longrightarrow \quad \forall q_1 \in \mathbf{\small questionsForBlock}(b_1)\;\forall q_2 \in \mathbf{\small questionsForBlock}(b_2) \; \bigl( index_d(q_1) < index_d(q_2) \bigr) \bigr)$$

This imposes a partial ordering on the questions; top level questions in a block may appear in any order, but for two blocks at a particular depth, all of the questions in the lower numbered block must be displayed before any of the questions in the block at the higher number.

- Containment : If there exists a block $$b$$ of depth $$d > 1$$, then for all $$i$$ from 0 to $$d$$, there must also exist blocks with ids $$[id_b[0]],..,[id_b[0],.., id_b[d-1]]$$. Each of these blocks is said to contain $$b$$. If $$b_1$$ is a containing block for $$b_2$$, then for all top level questions $$q_1$$ in $$b_1$$, it is never the case that

$$\exists q_i, q_j, q_k \bigl(\lbrace q_i, q_j \rbrace \subset \mathbf{\small questionsForBlock}(b_2) \; \wedge \; q_k \in \mathbf{\small questionsForBlock}(b_1) \; \wedge \; index_d(q_i) < index_d(q_k) < index_d(q_j) \bigr)$$

That is, none of the top level questions may be interleaved with a subblock's questions. Note that we do not require the survey designer to enumerate containing blocks if they do not hold top-level questions.

Sometimes what a survey designer really wants is a grouping, rather than an ordering. Consider the two motivating examples. It's clear for the psychology experiment that an ordering is necessary. However, the wage survey does not necessarily need to be ordered; it just needs to keep the questions together. If a survey designer would like to relax the constraints of an ordering, they can prefix the block's id with an underscore at the appropriate level. For a block numbered 1.2.3, if we change this to _1.2.3, then all other members of block 1 will need to have their identifiers modified to _1. If we were instead to change 1.2.3 to 1.2._3, then this block would be able to move around inside block 1.2 under the same randomization scheme as block 1.2's top level questions. Note that you cannot have a randomized block _n and an unrandomized block n.

Our last column to consider is the BRANCH column. Branching is when different choices for a particular question's answers lead to different paths through the survey. The main guarantee we want to preserve with branching is that the set of nodes in a respondent's path through the survey depends solely on their responses to the questions, not the randomization.

The contents of the BRANCH column are block ids associated with the branch destination. There can be exactly one branch question per top level block. This ensures the path property mentioned above. The BRANCH column also doubles as a sampling column. We permit one special branch case, where a block contains no subblocks and all of its top level questions are branch questions. In this case, the block is treated as a single question, whose value is selected randomly. If the resulting distributions of answers for the questions in this block are not determined to be drawn from the same underlying distribution, the system flags the block. Since the assumption is that all of the questions are semantically equivalent, the contents of the BRANCH column must be identical for each question. If the user does not want to branch to a particular location, but instead wants the user to see the next question (as with a free, top-level question), then BRANCH can be set to the keyword NULL.

We currently only allow branching to a top-level block, but are considering weakening this requirement.

Deprecated and Forthcoming Columns

The psycholinguistic surveys we've been running often require external files to be played. Non-text media are also often used in other web experiments. We had previously supported a RESOURCE column that would take a URL and display this under the question. Since the question column supports arbitrary HTML, it seemed redundant to include the RESOURCE column. These stimuli are part of the question, so separating them out didn't make much sense. While the current code still supports this column, it will not in the future.

Another column that we are considering deprecating is the RANDOMIZE column. We see two issues with this column : first of all, there has been some confusion about whether it referred to randomizing the question's answer options or if it referred to allowing the question's order to be randomized. It referred to the former, since question order randomization is handled by the BLOCK column. Secondly, we do not see any use-case for not randomizing the question options. It would exist to satisfy a client who has no interest in our quality control techniques.

Presley has suggested adding a CONDITION column, for use in web experiments. This addition is tempting; I would first need to consider whether the information it provides is already captured by the CORRELATED column (or whether we should simply rename the CORRELATED column CONDITION and add some additional behavior to the execution environment).

Runtime System

Okay, this blog post is too long already...runtime post forthcoming!

The pricing problem in SurveyMan

This is going to be a short post that I'll expand on more, post-portfolio...

One of the nice features of AutoMan is that it manages the pricing of a task for you. The user only needs to specify a maximum amount they're willing to pay, and AutoMan will return the result at the optimal price. It first computes the number of agreeing responses needed to have high confidence that the result is correct. Then it starts at an initial baseline assignment duration (i.e. the time expected to complete the task). The initial duration may be provided by the user; the default setting is 30 seconds. AutoMan uses this time to compute the wage, which is tied to the US federal minimum wage. The assignment is posted on Mechanical Turk for some lifetime set to one hundred times the task duration. If no results come back during that lifetime, it doubles the task time and reposts the job.
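The doubling scheme described above can be sketched in a few lines of Python. This is an illustration of the arithmetic only, not AutoMan's code: `repost_schedule`, the per-response cap `max_reward`, and the wage constant (assumed here to be 7.25 USD per hour) are all assumptions for the example.

```python
from typing import List, Tuple

FEDERAL_MINIMUM_WAGE = 7.25  # USD per hour; assumed here, check the current rate


def repost_schedule(max_reward: float,
                    duration_s: float = 30.0) -> List[Tuple[float, float, float]]:
    """List (duration in s, reward in USD, lifetime in s) for each posting,
    stopping once a single response would cost more than `max_reward`."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    schedule = []
    while True:
        # The wage owed for the expected duration of the task.
        reward = FEDERAL_MINIMUM_WAGE * duration_s / 3600
        if reward > max_reward:
            break
        schedule.append((duration_s, reward, 100 * duration_s))
        # No results within the lifetime: double the task time and repost.
        duration_s *= 2
    return schedule


for duration, reward, lifetime in repost_schedule(max_reward=0.25):
    print(f"{duration:6.0f}s  ${reward:.4f}  lifetime {lifetime:.0f}s")
```

Each repost doubles both the reward and the lifetime, so the cap bounds the number of reposts logarithmically.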

Of course, there's one caveat : since AutoMan relies on there being a single answer, if the population is in disagreement, the budget will be used up and no results will be returned.

One of the original motivations for SurveyMan was to address the idea of returning distributions of results, rather than point estimates of results. We were also interested in computing end-to-end confidence intervals for chained AutoMan computations. We realized that the underlying structure of chained distributions of functions exactly modeled surveys. Thus, SurveyMan.

SurveyMan today is quite far from this original motivation. Since we began collaborating with social scientists, we veered into experimental design and discovery, rather than static analysis or speculative execution of programs having functions returning probabilistic results.

Determining the optimal price for a SurveyMan task is a feature from AutoMan that I'd like to see in SurveyMan. AutoMan's automated pricing scheme was possible because the system could calculate the number of responses needed to determine if the answer had been found. This is significantly more challenging for SurveyMan. If we treat a survey as the joint probability distribution of each of its questions, then determining the sample size of "good" respondents boils down to power analysis. However, the techniques here are somewhat different; power analysis was designed for either the case where the probability of certain conditions could be computed directly, or in post hoc data analysis, once the data had already been collected. A first-pass consideration of what we're really looking for here is an online algorithm to determine the convergence of distribution of the joint probabilities of the questions. I don't think this is a bad start, but I worry about a decision procedure that relies on such complex data. For example, if we consider a survey that's flat, this means we treat each question as exchangeable. This does not however mean that the questions are independent. Suppose for a moment that they were; then we could say something like, the survey is a random variable defined to be the sum of the random variables representing the questions:

$$ S = Q_{1} + Q_{2} + ... + Q_{n} $$

This survey has $$n$$ questions and each of the random variables $$Q_i, 1 \leq i \leq n$$ corresponds to the distribution of the answer texts -- that is, it has no notion of its own position in the survey.

We could then decide that our stopping condition is the case where our expectation converges. Since expectation is linear, we can look at the convergence of each question's distribution and make our decision then.
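To spell out that step, assuming each answer is encoded as a number (an encoding this post does not specify), linearity of expectation gives

$$ E[S] = E[Q_{1}] + E[Q_{2}] + ... + E[Q_{n}] $$

so a stopping rule of this kind could track the running sample mean of each $$Q_i$$ separately and stop once every one of them has stabilized.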

Okay, so if the questions were actually independent, I don't see this being such a bad approach. I guess if we assume that the underlying population can be represented as the sum of some unknown number of independent normal distributions, we can say that the mean is a sufficient statistic and call it a day.

Of course, we have little reason to believe that the questions are independent. While randomizing the order of the questions simplifies our identification of bugs, it's quite different when we consider the convergence of distribution. We would need to consider each instance of the survey as a Bayesian network, where the previous questions are parents of the following questions. We already perform pairwise correlation tests; we could use some of this information to determine independence. If we could simplify the model sufficiently, we might be able to converge on an optimal number of samples. We could use the independence assumption to calculate a lower bound on the number of responses needed.

Anyway, this was meant to be a short post -- the idea is to present some of the difficulties of automatically determining pricing in SurveyMan. The point is that the pricing mechanism itself depends on knowing how many "good" responses we need and answering that is hard. Even if we could answer that question (and we should -- it's certainly been on the minds of our colleagues in linguistics), we would then need to consider the effects of allowing breakoff, which further complicates things.

Adversaries

Bad actors are a key threat to validity that cannot be controlled directly through better survey design. That is, unlike the case of bias in wording or order, we cannot eliminate bugs through the survey design. What we can do is use the design to make it easier to identify these adversaries.

Bots

Bots are computer programs that fill out surveys automatically. We assume that bots have a policy for choosing answers that is either completely independent of the question, or is based upon some positional preference.

No positional preference A bot that chooses responses randomly is an example of one that answers questions independently from their content.

Positional preference A bot that always chooses the first option, always chooses the last option, or alternates positions on the basis of the number of available choices (for example, "Christmas tree-ing" a multiple choice survey) has a positional preference.
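The bot policies above are simple enough to write down. The Python sketch below is one possible encoding, with hypothetical names throughout; in particular, the zig-zag in `christmas_tree_bot` is only one reading of "Christmas tree-ing".

```python
import random
from typing import Callable, List

# A policy maps (options, question index) to the position it selects.
Policy = Callable[[List[str], int], int]


def uniform_bot(rng: random.Random) -> Policy:
    """No positional preference: every position is equally likely."""
    return lambda options, i: rng.randrange(len(options))


def first_bot(options: List[str], i: int) -> int:
    return 0


def last_bot(options: List[str], i: int) -> int:
    return len(options) - 1


def christmas_tree_bot(options: List[str], i: int) -> int:
    """Zig-zag down and back up the option positions."""
    m = len(options)
    if m == 1:
        return 0
    period = 2 * (m - 1)
    step = i % period
    return step if step < m else period - step


def respond(survey: List[List[str]], policy: Policy) -> List[int]:
    return [policy(options, i) for i, options in enumerate(survey)]


likert = ["strongly disagree", "disagree", "agree", "strongly agree"]
print(respond([likert] * 7, christmas_tree_bot))  # [0, 1, 2, 3, 2, 1, 0]
```

None of these policies look at the question or option text, which is what separates them from the stronger surface-text adversaries.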

Lazy Respondents

We define a lazy respondent as a human who is behaving in a bot-like way. In the literature these individuals are called spammers and according to a study from 2010, almost 40% of the population sampled failed a screening task that only required basic reading comprehension. There are two key differences between human adversaries and software adversaries : (1) we hypothesize that individual human adversaries are less likely to choose responses randomly and (2) that when human adversaries have a positional preference, they are more likely to make small variations in their otherwise consistent responses. Regarding (1), while there is no end to the number of studies and amount of press devoted to humans' inability to identify randomness, there has been some debate over whether humans can actually generate sequences of random numbers. Regarding (2), while a bot can be programmed to make small variations in positional preference, we believe that humans will make much more strategic deviations in their positional preferences.

Both humans and bots may have policies that depend on the surface text of a question and/or its answer options. An example of a policy that chooses answers on the basis of surface text might be one that prefers the lexicographically first option, or one that always chooses surface strings equal to a value (e.g. contains "agree"). These adversaries are significantly stronger than the ones mentioned above.

It's possible that some could see directly modeling a set of adversaries as overkill; after all, services such as AMT rely on reputable respondents for their systems to attract users (or not?). While AMT has provided means for requesters to filter the population, this system can easily be gamed. This tutorial from 2010 describes best practices for maximizing the quality of AMT jobs. Unfortunately, injecting "attention check" or gold standard questions is insufficient to ward off bad actors. Surveys are a prime target for bad actors because the basic assumption is that the person posting the survey doesn't know what the underlying distribution of answers ought to look like -- otherwise, why would they post a survey? Sara Kingsley recently pointed us to an article from All Things Considered. Emery found the following comment:

I've been doing Mechanical Turk jobs for about 4 months now.

I think the quality of the survey responses are correlated to the amount of money that the requester is paying. If the requester is paying very little, I will go as fast as I can through the survey making sure to pass their attention checks, so that I'm compensated fairly.

Conversely, if the requester wants to pay a fair wage, I will take my time and give a more thought out and non random response.

A key problem that the above quote illustrates is that modeling individual users is fruitless. MACE is a seemingly promising tool that uses post hoc generative models of annotator behavior to "learn whom to trust and when." This work notably does not cite prior work by Panos Ipeirotis on modeling users with EM and considered variability in workers' annotations.

The problem with directly modeling individual users is that it cannot account for the myriad latent variables that lead a worker to behave badly. In order to do so, we would need to explicitly model every individual's utility function. This function would incorporate not only the expected payment for the task, but also the workers' subjective assessment of the ease of the task, the aesthetics of the task, or their judgement of the worthiness of the task. Not all workers behave consistently across tasks of the same type (e.g. annotations), let alone across tasks of differing types. Are workers who accept HITs that cause them dissatisfaction more likely to return the HIT, or to complete the minimum amount of work required to convince the requester to accept their work?

On Keeping the Survey a DAG

A topic that came up during my SurveyMan lab talk in October was our lack of support for looping questions. Yuriy had raised the objection that there will be cases where we will want to repeat a question, such as providing information on employment. We argued that, since we were emulating paper surveys (at the time), the user could provide an upper bound on the number of entries and ask the user whether they wanted to add another entry for a category. A concern I had was that, since we're interested in the role of survey length in the quality of responses, and since we allow breakoff, when we have a loop in a question, it becomes much more difficult to tell whether the question is a problem or if the length of the survey is a problem. Where previously we treated each question as a random variable, we would now need to model a repeating question as an unknown sum of random variables.

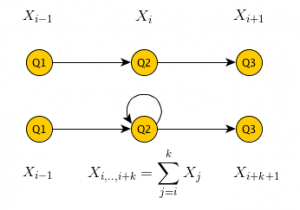

The probability model of a survey with a loop differs from the model of a survey without one. Note that while both random variables corresponding to the responses to question Q2 may be modeled by the same distribution, they will have different parameters.


This issue came up again during the OBT talk. The expanded version of Topsl that appeared in the PLT Redex book described a semantics for a survey that was allowed to have these kinds of repeated questions.

We do not think it is appropriate to model such questions as loops. Loops are fundamentally necessary to express computable functions. Since the kinds of questions these loops are modeling are more accurately described as having finite, unknown length, we do not want to encode the ability to loop forever.

Aside from this semantic difference, we see another problem with the potentially perpetual loop. Consider the use-case for such a question: in the case of the lab talk, it was Yuriy's suggestion that we allow people to enter an employment history of unknown length. In the case of Topsl, it was self-reporting relationship history. If a respondent's employment or relationship history is very long, they may be tempted to under-report the number of instances. This might be curtailed if the respondent is required to first answer* a question that asks for the number of jobs or relationships they** have had. Then responses in the loop could be correlated with the previous question, or the length of the loop could be bounded. In our setting, where we do not allow respondents to skip questions, the former would need to be implemented if we were to allow loops at all.

Alternatively, instead of presenting each response to what is semantically the same question as if it were a separate question, we could first ask the question for the number of jobs or relationships, and then ask a followup question on a page that takes the response to the previous question, and displays that number of text boxes on the page. We would still bound the total number of responses, but instead of presenting each question separately, we would present them as a single question.

In the analysis of a survey we ran, we found statistically significant breakoff at the freetext question. We'd like to test whether freetext questions in general are correlated with high breakoff. If this is the case, we believe it provides further evidence that the approach to "loop questions" is better implemented using our approach.

* I just wanted to note that I love splitting infinitives.

** While I'm at it, I also support gender-neutral pronouns. Political grammar FTW!