On calculating survey entropy

I've been spending the past two weeks converting analyses that were implemented in Python and Julia into Clojure. The OOPSLA Artifact Evaluation deadline is June 1 and moving these into Clojure means that the whole shebang runs on the JVM (and just one jar!).

One of the changes I really wanted to make to the artifact we submit was a lower upper bound on survey entropy. Upper bounds on entropy can be useful in a variety of ways: in these initial runs we did for the paper, I found them useful for comparing across different surveys. The intuition is that surveys with similar max entropies have similar complexity, similar runtimes, similar costs, and similar tolerance to bad behavior. Furthermore, if the end-user were to use the simulator in a design/debug/test loop, they could use max entropy to guide their survey design.

We've iterated our calculation of the max entropy. Each improvement has lowered the upper bound for some class of surveys.

Max option cardinality Our first method for calculating maximum entropy of a survey was the one featured in the paper: we find the question with the largest number of options and say that the entropy of the survey must be less than the entropy of a survey having equal number of questions, where every question has this maximum number of answer options. Each option has equal probability of being chosen. For some $$survey$$ having $$n$$ questions, the maximum entropy would then be $$\lceil n \log_2 (\max ( \lbrace \lvert \lbrace o : o \in options(q) \rbrace \rvert : q \in questions(survey) \rbrace ) ) \rceil$$.
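As a rough illustration, the max option cardinality bound can be sketched in a few lines of Python. The function name and the list-of-option-counts representation are my own assumptions for the example, not SurveyMan's API, and the sketch assumes at least one question has one or more options.

```python
import math

def max_cardinality_entropy(option_counts):
    """Upper bound in bits: treat every question as if it had the
    largest number of options seen anywhere in the survey."""
    n = len(option_counts)          # number of questions
    largest = max(option_counts)    # max option cardinality
    return math.ceil(n * math.log2(largest))

# Hypothetical survey with three questions offering 2, 4 and 8 options.
print(max_cardinality_entropy([2, 4, 8]))
```

For the hypothetical survey above, every question is charged 3 bits, so the bound is 9 bits even though the first question can only ever contribute 1.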

The above gives a fairly tight bound on surveys such as the phonology survey. For surveys that have more variance in the number of options proffered to the respondent, it would be better to have a tighter bound.

Total survey question max entropy We've had a calculation for total survey question max entropy implemented in Clojure for a few weeks now. For any question having at least one answer option, we calculate the entropy of that question, and sum up all those bits. For some $$survey$$ having $$n$$ questions, where each question $$q_i$$ has $$m_i$$ options, the maximum entropy would then be $$\lceil \sum_{i=1}^n \mathbf{1}_{\mathbb{N}^+}(m_i)\log_2(m_i)\rceil$$
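A minimal sketch of this sum, again assuming a survey is represented as a plain list of per-question option counts (a hypothetical representation chosen for the example). The `m >= 1` filter plays the role of the indicator function in the formula.

```python
import math

def total_question_max_entropy(option_counts):
    """Sum log2(m_i) over questions that have at least one option,
    then round up to a whole number of bits."""
    bits = sum(math.log2(m) for m in option_counts if m >= 1)
    return math.ceil(bits)

# Hypothetical survey: 2, 4 and 8 options, plus one instructional
# question with no options, which contributes nothing.
print(total_question_max_entropy([2, 4, 8, 0]))
```

Here the bound is 1 + 2 + 3 = 6 bits, compared with 12 bits if all four questions were charged for 8 options.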

While the total survey question max entropy gives a tighter bound on surveys with higher variance, it is still a bit too high for surveys with branching. Consider the wage survey. In Sara's initial formulation of the survey (i.e. not the one we ran), the question with the greatest number of answer options was one asking for the respondent's date of birth. The responses were dates ranging from 1900 to 1996. Most of the remaining questions have about 4 options each:

[table id=4 /]

Clearly in this case, using max option cardinality would not give much information about the entropy of the survey. The max cardinality maximum entropy calculation gives 258 bits, whereas the total survey question max entropy gives 80 bits.

This lower upper bound still has shortcomings, though -- it doesn't consider surveys with branching. For many surveys, branching is used to ask one additional question, to help refine answers. For these surveys, many respondents answer every question in the survey. However, there are some surveys that are designed so that no respondent answers every question in the survey. Branching may be used to re-route respondents along a particular path. We used branching in this way when we actually deployed Sara's wage survey. The translated version of Sara's survey has two 39-question paths, with a 2-option branch question to start the survey and a zero-option instructional question to end the survey. This version of the survey has a max cardinality maximum entropy calculation of $$\lceil 80 \log_2 97 \rceil = 528$$ bits and a total survey question max entropy of 160 bits (without the ceiling operator, this is approximately equal to two times the entropy of the previous version, plus one bit for the introductory branch question).
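The max cardinality figures quoted for the two versions of the wage survey can be checked with a little arithmetic. This sketch assumes the date-of-birth question offers 97 options (one per year from 1900 through 1996) and that the original version had 39 questions; the latter is inferred from the 258-bit figure rather than stated outright.

```python
import math

DOB_OPTIONS = 1996 - 1900 + 1   # 97 years, assuming one option per year

def max_cardinality_bits(num_questions, max_options):
    """Max option cardinality bound, rounded up to whole bits."""
    return math.ceil(num_questions * math.log2(max_options))

original_bits = max_cardinality_bits(39, DOB_OPTIONS)           # inferred question count
branching_bits = max_cardinality_bits(2 * 39 + 2, DOB_OPTIONS)  # two paths + branch + instructions
longest_path = 1 + 39 + 1                                       # branch question, one path, instructions

print(original_bits, branching_bits, longest_path)
```

The 80-question bound counts both 39-question paths, even though any single respondent sees at most 41 questions.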

The maximum number of bits needed to represent this survey approximately doubled from one version to the next. This isn't quite right -- we know that the maximum path through the survey is 41 questions, not 80. In this case, branching makes a significant difference in the upper bound.

Max path maximum entropy Let's instead compute the maximum entropy over distinct paths through the survey. We've previously discussed the computational complexity of computing distinct paths through surveys. In short, randomization significantly increases the number of possible paths through the survey; if we focus on paths through blocks instead, we have more tractable results. Rather than thinking about paths through the survey as distinct lists of questions, where equivalent paths have equivalent lengths and orderings, we can instead think about them as unique sets of questions. This perspective aligns nicely with the invariants we preserve.

Our new maximum entropy calculation will compute the entropy over unique sets of questions and select the maximum entropy computed over this set. Some questions to consider are:

- Are joined paths the same path?

- If we are computing empirical entropy, should we also consider breakoff? That is, do we need the probability of answering a particular question?

We consider paths that join to be distinct from each other; the probability of answering that question will sum up to one, if we don't consider breakoff. As for breakoff, for now let's ignore it. If we need to compute the empirical entropy over the survey (as opposed to the maximum entropy), then we will use the subset relation to determine which questions belong to which path. That is, if we have a survey with paths $$q_1 \rightarrow q_2 \rightarrow q_4$$ and $$q_1 \rightarrow q_3 \rightarrow q_4$$, then a survey response with only $$q_1$$ answered will be used to compute the path frequencies and answer option frequencies for both paths. The maximum entropy is then computed as $$\lceil \max(\lbrace -\sum_{q\in survey} \sum_{o \in ans(q)} \mathbb{P}(o \cap p) \log_2 \mathbb{P}(o \cap p) : p \in paths \rbrace) \rceil$$.

There are two pieces of information we need to calculate before actually computing the maximum entropy path. First, we need the set of paths. Since paths are unique over blocks, we can define a function to return the set of blocks over the paths. The key insight here is that for blocks that have the NONE or ONE branch paradigm, every question in that block is answered. For the branch ALL paradigm, every question is supposed to be "the same," so they will all have the same number of answer options. Furthermore, since the ordering of floating (randomizable) top level blocks doesn't matter, and since we prohibit branching from or to these blocks, we can compute the DAG on the totally ordered blocks and then just concatenate the floating blocks onto the unique paths through those ordered blocks.
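Here is a rough sketch of the path step, under some simplifying assumptions: the ordered blocks are given as a hypothetical successor map forming a DAG, each block is a list of per-question option counts, and every block is treated as a NONE or ONE block (so every question in a visited block is answered). The branch ALL paradigm is not modeled, and none of these names come from SurveyMan itself.

```python
import math

def enumerate_paths(successors, start):
    """All block paths from `start` to a block with no successors."""
    nexts = successors.get(start, [])
    if not nexts:
        return [[start]]
    return [[start] + rest
            for nxt in nexts
            for rest in enumerate_paths(successors, nxt)]

def max_path_entropy(successors, start, block_options, floating_blocks=()):
    """Largest total of log2(option count) over any single path,
    with floating blocks appended to every path."""
    best = 0.0
    for path in enumerate_paths(successors, start):
        blocks = list(path) + list(floating_blocks)
        bits = sum(math.log2(m)
                   for b in blocks
                   for m in block_options[b] if m >= 1)
        best = max(best, bits)
    return math.ceil(best)

# Hypothetical survey: a 2-option branch question, two alternative
# blocks, and a shared zero-option instructional block at the end.
successors = {"start": ["a", "b"], "a": ["end"], "b": ["end"]}
block_options = {"start": [2], "a": [4, 4], "b": [4, 4, 4], "end": [0]}
print(max_path_entropy(successors, "start", block_options))
```

In this toy survey the path through block "b" dominates, giving 1 + 6 = 7 bits, rather than the 11 bits we would get by summing over every question.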

The second thing we need to compute is $$\mathbb{P}(o \cap p)$$. The easiest way to do this is to take a survey response and determine which unique path(s) it belongs to. If we count the number of times we see option $$o$$ on path $$p$$, the probability we're estimating is $$\mathbb{P}(o | p)$$. We can compute $$\mathbb{P}(o \cap p)$$ from $$\mathbb{P}(o | p)$$ by noting that $$\mathbb{P}(o \cap p) = \mathbb{P}(o | p)\mathbb{P}(p)$$. This quantity is computed by $$\frac{\# \text{ of } o \text{ on path } p}{\#\text{ of responses on path } p}\times\frac{\#\text{ of responses on path } p}{\text{total responses}}$$, which we can reduce to $$\frac{\# \text{ of } o \text{ on path } p}{\text{total responses}}$$. It should be clear from this derivation that even if two paths join, the entropy for the joined sub path is equal to the case where we treat paths separately.
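The counting argument above can be sketched as follows. I'm assuming responses are dictionaries from question ids to chosen options and paths are named sets of question ids; both representations, and the function names, are hypothetical. A response is credited to every path whose question set contains its answered questions, which is the subset relation described earlier.

```python
import math
from collections import Counter

def joint_option_probabilities(responses, paths):
    """Estimate P(o ∩ p) as (# of option o on path p) / (total responses)."""
    total = len(responses)
    counts = Counter()
    for response in responses:
        answered = set(response)
        for name, questions in paths.items():
            if answered <= questions:          # response is consistent with this path
                for q, o in response.items():
                    counts[(name, q, o)] += 1
    return {key: c / total for key, c in counts.items()}

def path_entropy(probs, path_name):
    """-sum of P log2 P over the (question, option) pairs seen on one path."""
    return -sum(p * math.log2(p)
                for (name, _, _), p in probs.items() if name == path_name)

paths = {"p1": {"q1", "q2", "q4"}, "p2": {"q1", "q3", "q4"}}
responses = [
    {"q1": "a", "q2": "x", "q4": "y"},
    {"q1": "a", "q3": "z", "q4": "y"},
    {"q1": "b"},   # only q1 answered: counted on both paths
    {"q1": "a"},
]
probs = joint_option_probabilities(responses, paths)
print(probs[("p1", "q1", "a")], path_entropy(probs, "p1"))
```

Note that the two responses that stop after $$q_1$$ contribute to both paths, so the frequencies for $$q_1$$ are identical on "p1" and "p2".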

The maximum entropy for the max path in the wage survey, computed using the current implementation of SurveyMan's static analyses, is 81 bits -- equivalent to the original version of the survey, plus one extra bit for the branching.

Hack the system

The Java/Clojure QC of SurveyMan has a RandomRespondent built in. This RandomRespondent class generates answers to the surveys on the basis of some policy. The policies currently available are uniform random, first option, last option, and Gaussian. I've been thinking about some other adversary models I could add to the mix :

- Christmas Tree : This is a variant of the uniform random respondent, where the respondent zigzags down the survey in the form of a "Christmas Tree."

- Memory Bot : This is more like an augmentation to one of the existing policies, where the questions and answers are cached, and for each question, the bot checks whether it has answered something like it before. We know that sometimes researchers repeat questions, or have similarly worded questions with the same answers (e.g. year of birth). The goal of this bot would be to identify similarly worded questions and try to give consistent answers.

- IR Bot : Alternately, we could search through Google for answers and use those answers as solutions.

It's fairly trivial to write some Javascript to answer our kind of surveys. Since we now have automated browser testing set up, we should also be able to test collusion in the context of the full pipeline. W00t!

Pricing, for real this time

Reader be warned : I began this draft several weeks ago, so there might be a lack of coherence...

A few weeks ago I posted some musings on pricing. In that post I was mainly concerned with the modeling problem for pricing. Here I'd like to discuss some research questions I've been bandying about with Sara Kingsley, and outline the experiments we'd like to run.

The Problem

Pricing is intricately tied together with quality control; we use pricing algorithms to help ensure quality control. When we previously outlined our adversaries, we took a traditional approach with the assumption that an actor is either good or bad. There are several key features that this model ignores:

- Some workers are better at some types of tasks than other types of tasks.

- The quality of the task has an impact on the quality of the worker's output.

- Some design decisions in services such as AMT sometimes make it difficult to tease out the difference between the above two.

(1) is well known and solved by conditioning the classification on the task. Plenty of work on assessing the quality of AMT workers incorporates task difficulty or task type. Task type discretizes the space, making classification clean and easy. Task difficulty is more difficult to model, since it can be highly subjective. I'm also not sure it's entirely discrete and have not come across a compelling paper on the subject (though I haven't looked thoroughly, so please post any in the comments!).

(2) seems to be better-known among social scientists than computer scientists. AMT workers have online forums where they post information about HITs. Typically if a HIT is good, they post very little additional information. If a HIT is bad, they will review it.

Poorly designed or unclear HITs incur a high cost for the workers and the requesters. Literature on crowdworkers' behavior suggests that they are aiming for a particular rate. On AMT, a worker can return a HIT at any time. However, if a worker returns a HIT, they will not be compensated for any work whatsoever, and no information about abandonment is returned to the requester. Consequently, as a worker makes their way through a HIT, they must weigh the cost of completion against the cost of abandonment. Even workers who are highly skilled at a particular task may perform poorly if a HIT is poorly designed. If workers do not reach out to requesters or if requesters do not search for their own HITs on forums, they may never know that workers are abandoning the work, or if they do, why.

Quality of the work is clearly tied to quality of the task. In SurveyMan, we address quality of the task in a more principled way than just best practices. It would also stand to reason that quality of the work would be tied to price. One might hypothesize that a higher price for a task would translate to higher quality work. However (according to what Sara's told me), this is not the case. Work quality allegedly does not appear to respond to price. We believe that this result is a direct consequence of the AMT shortcoming detailed above -- prohibiting workers from submitting early enforces a discontinuity in the observed quality/utility function.

How to address pricing

There are two main research questions we would like to address:

- Does the design of SurveyMan change worker behavior?

- Can we find a general function for determining the price/behavior tradeoff, and implement this as part of the SurveyMan runtime system?

The impetus for these particular questions was the results we found when running Sara's wage survey. There were two differences in the deployment of this survey: (1) I did not run this survey with a breakoff notice and (2) this was the first survey launched over a weekend.

So-called "time of day effects" are a known problem with AMT. Since AMT draws primarily on workers from the US and India, there are spikes in participation during times when these workers are awake and engaged. Many workers perform HITs while employed at another job. It wouldn't be a stretch to claim that sub-populations have activity levels that can be expressed as a function of the day of the week. This could explain some of the behavior we observed with Sara's wage survey. However, the survey ran for almost a week before expiring. I believe that (1) had a strong influence on workers' behavior.

Is SurveyMan the Solution?

Sara had mentioned some work in economics that found that changing the price paid for a HIT on AMT had no impact on the quality of the work. I had read some previous work that discussed the impact of price on attracting workers, but discussed quality control as a function of task design, rather than pricing. I suspect that the observed absence of difference between price points is related to the way the AMT system is designed.

AMT does not pay for partial work. When a worker accepts a HIT, they can either complete the HIT and submit it for payment (which is not guaranteed), or they return the HIT and receive no payment. Since requester review sites exist, the worker can use the requester's reputation as a proxy for the likelihood that they'll be paid for their work and as a proxy for the quality of the HIT.

Consider the case where the HIT is designed so that the worker has complete information about the difficulty of the task. In the context of SurveyMan, this would be a survey whose contents are displayed all on the same page. We know that there will be surveys where this approach is simply not feasible - one example that comes to mind is experimental surveys that require measuring the difference in a respondent's responses over two different stimuli. In any case, if the user is able to see the entire survey, they will be able to gauge the amount of effort required to complete the task, and make an informed decision about whether or not to continue with the HIT.

This design has several drawbacks. There's the aforementioned restriction over the types of surveys we can analyze. There's also a problem with our ability to measure breakoff. Since we display one question at a time, in a more or less randomized order, we can tell the difference between questions that cause breakoff and length-related breakoff. When the respondent is allowed to skip around and answer questions in any order, we lose this power. We also lose any inferences we might make about question order, and generally have a more muddied analysis.

Displaying questions one at a time was always part of our design. However, we decided to allow users to submit early as a way of handling this issue with AMT and partial work. Since we couldn't get any information about returned HITs, we decided to discourage users from returning them and instead allow them to submit their work early. Since we figured that we would need to provide users with an incentive to continue answering questions, we displayed a notice at the beginning of a survey that told the user that they would be paid a bonus commensurate with the amount and quality of the work they submitted. We decided against telling the user (a) how the bonus would be calculated and (b) how long the survey would be.

I initially thought we would court bots by allowing users to submit after answering the first question. This was absolutely not the case for the phonology surveys. Anecdotally it seems that AMT has been cracking down on bots, but I had a really hard time believing that we had no bots. It wasn't until I posted the wage survey that I began to see this behavior. I believe that it is related to the lack of breakoff notice.

It would be interesting to test some of these hypotheses on a different crowdsourcing platform, especially one that allows tracking for partial work. Even a system that has a different payment system set up would be a good point of comparison.

Possible Experiments

We set up the wage survey to run a fully randomized version and a control version at the same time. I really liked this setup, since it meant that any given respondent had a 50% chance of seeing one of the two, effectively giving us randomized assignments.

Experiment 1 To start with, I would like to run another version that randomly displays the breakoff notice on each version. One potentially confounding problem might be the payment of bonuses, since this has been our practice in the past, and may be known to the workers. The purpose of this experiment is to test whether showing the breakoff notice changes the quality of responses.

Experiment 2 Another parameter that needs more investigation is the base pay. We recently started using federal minimum wage, an estimated time per question, and the max or average path through the survey (whether to use max or average is still up for debate). I've seen very low base pay, with the promise of bonuses, successfully attract workers. It isn't clear to me how the base pay is related to the number of responses or quality of worker.
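One plausible way to combine those three ingredients is a simple product, sketched below. The post does not spell out the exact formula, so the multiplication, the function name, and the 10-seconds-per-question estimate are all assumptions for illustration; the $7.25 figure is the US federal minimum hourly wage.

```python
FEDERAL_MINIMUM_WAGE = 7.25  # USD per hour

def base_pay(path_length, seconds_per_question, hourly_wage=FEDERAL_MINIMUM_WAGE):
    """Base pay in USD: estimated time on the path, paid at the hourly wage."""
    hours = path_length * seconds_per_question / 3600
    return round(hours * hourly_wage, 2)

# Hypothetical: the 41-question max path at an assumed 10 seconds per question.
print(base_pay(41, 10))
```

Swapping the max path for the average path only changes the `path_length` argument, which makes it easy to compare the two choices for a given survey.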

Bugs and their Analyses

[table id=3 /]

Correlation

Recall that correlation is typically measured as the degree to which two variables covary. We are generally interested in correlation as a measure of predictive power. Correlation coefficients give the magnitude of a monotone relationship between two variables. Completely random responses for two questions will result in a low correlation coefficient.

We use two measures of correlation. For ordered questions, we use Spearman's $$\rho$$; this coefficient ranks responses and measures the degree to which the ranked results have a monotone relationship. For unordered questions, we use Cramer's $$V$$. The procedure for Cramer's $$V$$ is based on the $$\chi^2$$ statistic; we take one question and compute the empirical probability for each answer. We then use this estimator to compute the expected values for each of the values in the other question and find the normalized differences between observed and expected values. The sum of these values is the $$\chi^2$$ statistic, which has a known distribution. Cramer's $$V$$ scales the value of the $$\chi^2$$ statistic by the sample size and the minimum degrees of freedom.
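A minimal sketch of Cramer's $$V$$ using only the standard library, assuming the answers to two questions arrive as paired lists (one entry per respondent). The function names are mine; this is the textbook formulation rather than SurveyMan's own code, and it does nothing about the small-count sensitivity mentioned below.

```python
import math
from collections import Counter

def chi_squared(xs, ys):
    """Pearson chi-squared statistic for two paired categorical samples."""
    n = len(xs)
    row, col = Counter(xs), Counter(ys)
    observed = Counter(zip(xs, ys))
    stat = 0.0
    for a in row:
        for b in col:
            expected = row[a] * col[b] / n   # count expected under independence
            stat += (observed[(a, b)] - expected) ** 2 / expected
    return stat

def cramers_v(xs, ys):
    """Scale chi-squared by sample size and the smaller (categories - 1)."""
    n = len(xs)
    k = min(len(set(xs)), len(set(ys))) - 1
    if k == 0:
        return 0.0   # a constant question carries no association
    return math.sqrt(chi_squared(xs, ys) / (n * k))

# Hypothetical answers: perfectly associated, then unrelated.
print(cramers_v(["a", "b", "a", "b"], ["x", "y", "x", "y"]))
print(cramers_v(["a", "a", "b", "b"], ["x", "y", "x", "y"]))
```

The first pair of hypothetical questions moves in lockstep and scores 1.0; the second pair is independent in the sample and scores 0.0.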

Both of these tests are sensitive to small counts for some categories. We generally do not collect sufficient information to produce meaningful confidence intervals; as a result, we simply flag correlations that may be of interest.

Order Bias

We use the $$\chi^2$$ statistic directly to compute order bias for unordered questions. For any question pair $$q_i, q_j, i\neq j$$, we partition the sample into two sets : $$S_{i<j}$$, the set of responses where $$q_i$$ precedes $$q_j$$, and $$S_{j<i}$$, the set of responses where $$q_i$$ follows $$q_j$$. We assume each set is independent.* We show, without loss of generality, how to test for bias in $$q_i$$ when $$q_j$$ precedes it:

- Compute frequencies $$f_{i<j}$$ for the answer options of $$q_i$$ in the set of responses $$S_{i<j}$$. We will use these values to compute the estimator.

- Compute frequencies $$f_{j<i}$$ for the answer options of $$q_i$$ in the set of responses $$S_{j<i}$$. These will be our observations.

- Compute the $$\chi^2$$ statistic on the data set. The degrees of freedom will be one less than the number of answer options, squared. If the probability of observing a statistic at least this large under the $$\chi^2$$ distribution with these degrees of freedom falls below our chosen significance level, there is a significant difference between the orderings.

We compute these values for every unique question pair.
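The first two steps and the statistic itself might look like the sketch below. It assumes we have already split $$q_i$$'s answers into the two partitions; the function and argument names are hypothetical. It stops at the statistic and leaves the comparison against the $$\chi^2$$ distribution to a statistics library. Options that never appear in the estimator set are skipped to avoid dividing by zero, which is a simplification.

```python
from collections import Counter

def order_bias_statistic(answers_i_first, answers_j_first, options):
    """Chi-squared statistic for q_i's answers when q_j precedes it,
    against expectations estimated from responses where q_i came first."""
    estimator = Counter(answers_i_first)    # frequencies f_{i<j}
    observed = Counter(answers_j_first)     # frequencies f_{j<i}
    n_est, n_obs = len(answers_i_first), len(answers_j_first)
    stat = 0.0
    for o in options:
        expected = estimator[o] / n_est * n_obs
        if expected == 0:
            continue   # simplification: no estimate available for this option
        stat += (observed[o] - expected) ** 2 / expected
    return stat

# Hypothetical data: answers split evenly when q_i comes first,
# but skew toward "a" when q_j precedes it.
i_first = ["a"] * 5 + ["b"] * 5
j_first = ["a"] * 8 + ["b"] * 2
print(order_bias_statistic(i_first, j_first, ["a", "b"]))
```

With identical answer distributions in both partitions the statistic is 0; the skewed example gives $$(8-5)^2/5 + (2-5)^2/5 = 3.6$$.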

Wording

Wording bias is classified in the same way as order bias, except in this case, instead of comparing two sets of responses, we compare $$k$$ sets of responses, corresponding to the number of variants we are interested in.

Breakoff

We address two kinds of breakoff : ones determined by position, and ones determined by question. For both analyses, we use the nonparametric bootstrap procedure to determine a one-sided 95% confidence interval and flag indices and questions whose counts exceed the threshold.
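To make the procedure concrete, here is one way a one-sided bootstrap threshold could be computed. The choice of the mean as the bootstrapped statistic, the iteration count, and the sample data are all assumptions made for the sketch; the post does not say which statistic SurveyMan resamples.

```python
import random

def bootstrap_upper_bound(values, statistic, iterations=2000, alpha=0.05, seed=0):
    """Upper end of a one-sided (1 - alpha) bootstrap interval for `statistic`."""
    rng = random.Random(seed)   # fixed seed so the sketch is repeatable
    n = len(values)
    estimates = sorted(
        statistic([rng.choice(values) for _ in range(n)])   # resample with replacement
        for _ in range(iterations)
    )
    index = min(iterations - 1, int((1 - alpha) * iterations))
    return estimates[index]

def mean(xs):
    return sum(xs) / len(xs)

# Hypothetical breakoff counts by position; the last position stands out.
counts = [0, 0, 1, 0, 2, 0, 0, 1, 0, 20]
threshold = bootstrap_upper_bound(counts, mean)
flagged = [i for i, c in enumerate(counts) if c > threshold]
print(threshold, flagged)
```

Only the final position is flagged in this toy data, which mirrors the pattern of heavy breakoff at the last question.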

Breakoff by position is often an indicator that the survey is too long. Breakoff by question may indicate that a question is unclear, offensive, or burdensome to the respondent. There are also some cases where breakoff may indicate a kind of order bias.

Adversaries

We've tried a variety of methods for detecting adversaries. The best empirical results we've seen so far have been for a method that uses entropy.

We first compute the empirical probabilities for each question's answer options. Then for every response $$r$$, we calculate a score styled after entropy : $$score_{r} = \sum_{i=1}^n p(o_{r,q_i}) * \log_2(p(o_{r,q_i}))$$. We then use the bootstrap method to find a one-sided 95% confidence interval and flag any responses that are above the threshold.
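A small sketch of the scoring step, following the formula exactly as written above (a sum of $$p \log_2 p$$ terms with no leading minus sign, so scores are never positive). Responses are assumed to be dictionaries from question ids to chosen options, and the function names are hypothetical. The bootstrap thresholding is left out.

```python
import math
from collections import Counter

def option_probabilities(responses):
    """Empirical probability of each answer option, per question."""
    counts = {}
    for response in responses:
        for q, o in response.items():
            counts.setdefault(q, Counter())[o] += 1
    return {q: {o: c / sum(cs.values()) for o, c in cs.items()}
            for q, cs in counts.items()}

def response_score(response, probs):
    """Entropy-styled score: sum of p * log2(p) over the options chosen."""
    return sum(probs[q][o] * math.log2(probs[q][o])
               for q, o in response.items())

# Hypothetical sample: three respondents pick "a", one picks "b".
responses = [{"q1": "a"}, {"q1": "a"}, {"q1": "a"}, {"q1": "b"}]
probs = option_probabilities(responses)
print(response_score({"q1": "a"}, probs), response_score({"q1": "b"}, probs))
```

In this sample the minority answer "b" has probability 0.25 and scores exactly -0.5, while the majority answer scores closer to zero.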

*Independence is based on the assumption that each worker is truly unique and that workers do not collude.

Experiment Report I : Breakoff, Bot Detection, and Correlation Analysis for Flat, Likert-scale Surveys

We ran a survey previously conducted by Brian Smith four times, to test our techniques against a gold-standard data set.

| Date launched | Time of Day launched (EST) | Total Responses | Unique Respondents | Breakoff? |

| Tue Sep 17 2013 | Morning 9:53:35 AM EST | 43 | 38 | No |

| Fri Nov 15 2013 | Night | 90 | 67 | Yes |

| Fri Jan 10 2014 | Morning | 148 | 129 | Yes |

| Thu Mar 13 2014 | Night 11:49:18 PM EST | 157 | 157 | Yes |

This survey consists of three blocks. The first block asks demographic questions : age and whether the respondent is a native speaker of English. The second block contains 96 Likert-scale questions. The final block consists of one freetext question, asking the respondent to provide any feedback they might have about the survey.

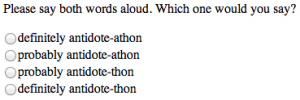

Each of the 96 questions in the second block asks the respondent to read aloud an English word suffixed with either of the pairs "-thon/-athon" or "-licious/-alicious" and judge which sounds more like an English word.

First Run

The first time we ran the survey was early in SurveyMan's development. We had not yet devised a way to measure breakoff and had no quality control mechanisms. Question and option position were not returned. We sent the data we collected to Joe Pater and Brian Smith. Brian reported back :

The results don't look amazing, but this is also not much data compared to the last experiment. This one contains six items for each group, and only 26 speakers (cf. to 107 speakers and 10 items for each group in the Language paper).

Also, these distributions are *much* closer to 50-50 than the older results were. The fact that -athon is only getting 60% in the final-stress context is kind of shocking, given that it gets 90% schwa in my last experiment and the corpus data. Some good news though -- the finding that schwa is more likely in -(a)thon than -(a)licious is repeated in the MTurk data.

Recall that the predictions of the Language-wide Constraints Hypothesis are that:

1. final stress (Final) should be greater than non-final stress (noFinal), for all contexts. This prediction looks like it obtains here.

2. noRR should be greater than RR for -(a)licious, but not -(a)thon. Less great here. We find an effect for -thon, and a weaker effect for -licious.

licious (proportion schwaful)

| | noRR | RR |

| Final | 0.5276074 | 0.5182927 |

| noFinal | 0.4887218 | 0.5031056 |

thon (proportion schwaful)

| | noRR | RR |

| Final | 0.5950920 | 0.5670732 |

| noFinal | 0.5522388 | 0.4938272 |

Our colleagues felt that this made a strong case for automating some quality control.

Second Run

The second time we ran this experiment, we permitted breakoff and used a Mechanical Turk qualification to screen respondents. We required that respondents have completed at least one HIT and have an approval rate of at least 80% (this is actually quite a low approval rate by AMT standards). We asked respondents to refrain from accepting this HIT twice, but did not reject their work if they did so. Although we could have used qualifications to screen respondents on the basis of country, we instead permitted non-native speakers to answer, and then screened them from our analysis. In future versions, we would recommend making the native speaker question a branch question instead.

We performed three types of analyses on this second run of the survey : we filtered suspected bots, we flagged breakoff questions and positions, and we did correlation analysis.

Of the 67 unique respondents in this second run of the phonology survey, we had 46 self-reported native English speakers. We flagged 3 respondents as bad actors. Since we do not have full randomization for Likert scale questions, we use positional preference to flag potential adversaries. Since symmetric positions are equally likely to hold one extreme or another, we say that we expect the number of responses in either of the symmetric positions to be equal. If there is a disproportionate number of responses in a particular position, we consider this bad behavior and will flag it. Note that this method does not address random respondents.

Our three flagged respondents had the following positional frequencies (compared with the expected number, used as our classification threshold). The total answered are out of the 96 questions that comprise the core of the study.

| Position 1 | Position 2 | Position 3 | Position 4 | Total Answered |

| 82 >= 57.829081 | 4 | 10 | 0 | 96 |

| 0 | 84 >= 64.422350 | 9 | 3 | 96 |

| 28 >= 24.508119 | 10 | 0 | 1 | 39 |

We calculated statistically significant breakoff for both question and position at counts above 1. We use the bootstrap to calculate confidence intervals and round the counts up to the nearest whole number. Due to the small sample size in comparison with the survey length, these particular results should be viewed cautiously:

| Position | Count |

| 40 | 2 |

| 44 | 2 |

| 49 | 3 |

| 66 | 2 |

| 97 | 20 |

| Wording Instance (Question) | Suffix | Count |

| 'marine' | 'thon' | 2 |

| 'china' | 'thon' | 2 |

| 'drama' | 'thon' | 2 |

| 'estate' | 'thon' | 2 |

| 'antidote' | 'thon' | 4 |

| 'office' | 'licious' | 2 |

| 'eleven' | 'thon' | 2 |

| 'affidavit' | 'licious' | 2 |

Two features jump out at us for the positional breakoff table. We clearly have significant breakoff at question 98 (index 97). Recall that we have 96 questions in the central block, two questions in the first block, and one question in the last block. Clearly a large number of people are submitting responses without feedback. The other feature we'd like to note is how there is some clustering in the 40s - this might indicate that there is a subpopulation who does not want to risk nonpayment due to our pricing scheme and has determined that breakoff is optimal at this point. Since we do not advertise the number of questions or the amount we will pay for bonuses, respondents must decide whether the risk of not knowing the level of compensation is worth continuing.

As with Cramer's $$V$$, we flag cases where Spearman's $$\rho$$ is greater than 0.5. We do not typically have sufficient data to perform hypothesis testing on whether or not there is a correlation, so we flag all found strong correlations.

The correlation results from this run of the survey were not good. We had 6 schwa-final words and 6 vowel-final words. There are 15 unique comparisons for each set. Only 5 pairs of schwa-final words and 3 pairs of vowel-final were found to be correlated in the -thon responses. 9 pairs of schwa-final words and 1 pair of vowel-final words were found to be correlated in the -licious responses. If we raised the correlation threshold to 0.7, none of the schwa-final pairs were flagged and only 1 of the vowel-final pairs was flagged in each case. Seven additional pairs were flagged as correlated for -thon and 3 additional pairs were flagged for -licious.

Third Run

The third run of the survey used no qualifications. We had hoped to attract bots with this run of the survey. Recall that in the previous survey we filtered respondents using AMT Qualifications. Our hypothesis was that bots would submit results immediately.

This run of the survey was the first in this series to be launched during the work day (EST). We obtained 148 total responses, of which 129 were unique. 113 unique respondents claimed to be native English speakers. Of these, we classified 8 as bad actors.

| Position 1 | Position 2 | Position 3 | Position 4 | Total Answered |

| 23 >= 21.104563 | 41 | 31 | 1 | 96 |

| 0 | 3 | 51 >= 40.656844 | 38 >= 30.456324 | 92 |

| 25 >= 22.476329 | 39 | 31 | 1 | 96 |

| 29 | 67 >= 66.209126 | | | 96 |

| 6 | 12 | 43 >= 41.282716 | 21 | 82 |

| 0 | 4 | 42 >= 35.604696 | 50 >= 38.141304 | 96 |

| 6 | 28 | 32 | 30 >= 29.150767 | 96 |

| 25 | 0 | 3 | 68 >= 64.422350 | 96 |

Clearly there are some responses that are just barely past the threshold for adversary detection ; the classification scheme we use is conservative.

Interestingly, we did not get the behavior we were courting for breakoff. Only the penultimate index had statistically significant breakoff; 51 respondents did not provide written feedback. We found 9 words with statistically significant breakoff at abandonment counts greater than 2 (they all had counts of 3 or 4). The only words to overlap with the previous run were "estate" and "antidote". The endings for both words differed between runs.

As in the previous run, only 5 pairs of schwa-final words plus -thon had correlations above 0.5. Fewer vowel-final pairs (2, as opposed to 3) plus -thon were considered correlated. For the -licious suffix, 10 out of 15 pairs of schwa-final words had significant correlation, compared with 9 out of 15 in the previous run. As in the previous run, only 1 pair of vowel-final words plus -licious had a correlation coefficient above 0.5. These results do not differ considerably from the previous run.

Fourth Run

This run was executed close to midnight EST on a Thursday. Of the 157 respondents, 98 reported being native English speakers. We found 83 responses that were not classified as adversaries. Below are the 15 bad actors' responses:

| Position 1 | Position 2 | Position 3 | Position 4 | Total Answered |

| 65 >= 64.422350 | 3 | 0 | 28 | 96 |

| 29 >= 25.847473 | 2 | 0 | 2 | 33 |

| 0 | 5 | 87 >= 63.825733 | 4 | 96 |

| 9 | 18 | 19 | 37 >= 35.604696 | 83 |

| 13 | 2 | 18 | 52 >= 47.483392 | 85 |

| 53 >= 40.029801 | 2 | 1 | 0 | 56 |

| 96 >= 66.209126 | 0 | | | 96 |

| 3 | 12 | 40 >= 39.401554 | 41 >= 34.327636 | 96 |

| 1 | 3 | 5 | 17 >= 16.884783 | 26 |

| 3 | 1 | 1 | 91 >= 65.018449 | 96 |

| 36 >= 33.044204 | 42 >= 40.656844 | 12 | 6 | 96 |

| 6 | 32 >= 29.804578 | 5 | 0 | 43 |

| 35 >= 30.456324 | 41 | 17 | 3 | 96 |

| 20 >= 19.716955 | 18 | 11 | 2 | 51 |

| 0 | 0 | 5 | 91 >= 63.228589 | 96 |

For the -thon pairs, 1 out of the 15 schwa correlations was correctly detected. None of the vowel correlations were correctly detected. For -licious, 2 schwa pair correlations were correctly detected and 4 vowel pair correlations were correctly detected.

For this survey we calculated statistically significant breakoff for individual questions when their counts were above 2 and for positions when their counts were above 1. The penultimate question had 38 instances of breakoff. Fourteen questions had breakoff. The maximum cases were 4 counts each for "cayenne" and "hero" for the suffix -licious.

Entropy Comparison

We observed the following entropies over the 96 questions of interest. Note that the maximum entropy for this section of the survey is 192.

| Instance | Initial Entropy | Entropy after removing adversaries |

| Fourth Run | 186.51791933021704 | 183.64368029571745 |

| Third Run | 173.14288208850118 | 169.58411284759356 |

| Second Run | 172.68764654915321 | 169.15343722609836 |
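The 192-bit ceiling follows from 96 questions with 4 Likert positions each. The sketch below checks that figure and shows one way the observed entropies could be computed, assuming they are sums of per-question empirical entropies; that reading of the table, the function name, and the toy data are my assumptions.

```python
import math
from collections import Counter

def survey_entropy(responses, questions):
    """Sum over questions of the empirical entropy (in bits) of the answers."""
    total = 0.0
    for q in questions:
        counts = Counter(r[q] for r in responses if q in r)
        n = sum(counts.values())
        total -= sum(c / n * math.log2(c / n) for c in counts.values())
    return total

# 96 questions, each with 4 equally likely options: 96 * 2 bits.
max_bits = 96 * math.log2(4)

# Hypothetical single question answered uniformly across four positions.
uniform = [{"q": position} for position in (1, 2, 3, 4)]
print(max_bits, survey_entropy(uniform, ["q"]))
```

A question whose answers are spread evenly over the four positions contributes the full 2 bits; any preference for one position pulls the total below 192.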

Notes

Due to a bug in the initial analysis in the last survey (we changed formats between the third and the fourth), the first run of the analysis did not filter out any non-native English speakers and ran the analysis on 137 respondents. There were 20 adversaries calculated in total and only a handful of correlations detected. The entropy before filtering was 190.0 and after, 188.0. We also counted higher thresholds for breakoff. We believe this illustrates the impact of a few bad actors on the whole analysis.

Note that we compute breakoff using the last question answered. A respondent cannot submit results without selecting some option; without doing this, the "Submit Early" button generally will not appear. However, for the first three runs of the survey, we supplied custom Javascript to allow users to submit without writing in the text box for the last question.
