Repost: Tries for typeclass lookup

Over at ezyang's research log, Edward reports on SPJ's question of whether tries would improve typeclass lookup. There are no comments yet, but it would be nice to see some discussion!

[Read More]The one where I'm a Debbie Downer

I've spent the month of October ironing out some nasty kinks in my internship and prepping for my OOPSLA talk, so I'm pretty behind on blog posts (what else is new). This post isn't me finishing up anything I'd started earlier in the season. Instead, it's a quick look at a study I saw in my Google Scholar recommendations.

The title of the work is

Does the Sun Revolve Around the Earth? A Comparison between the General Public and On-line Survey Respondents in Basic Scientific Knowledge

The conclusions are heartening -- the authors find (a) AMT workers are significantly more scientifically literate than their general population peers and (b) even with post-stratification, the observed respondents fare better. The point of the survey was an assessment of the generalizability of conclusions drawn from AMT samples. The authors note that past replications of published psychological studies were based on convenience samples anyway, so the bar for replication may have been lower. Polling that requires probability sampling is another animal.*

The authors describe their method; I'm writing my comments inline:

Using a standard Mechanical Turk survey template, we published a HIT (Human Intelligence Task) titled "Thirteen Question Quiz" with the short description "Answer thirteen short multiple choice questions." The HIT was limited to respondents in the United States over the age of 18, to those that have a HIT Approval Rate greater than or equal to 95%, and to those that

have 50 or more previously approved HITs.

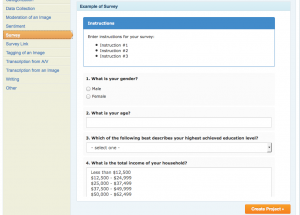

Here is a screenshot of the standard AMT survey template:

We can safely assume that (a) the entire contents of the survey was displayed at once and (b) there was no randomization.

With Turker filters, the quality of the respondents was probably pretty good. The title conveys that the survey is easy. If they didn't use the keyword survey, they probably attracted a broader cohort than typical surveys.

I did a quick search with the query "mechanical turk thirteen question quiz" and came up with these two hits, which basically just describe the quiz as taking <2mins to complete and being super easy fast cash (didn't see anything on TurkerNation or other forums). Speaking of fast cash, the methods section states:

Respondents were paid $0.50 regardless of how they performed on the survey.

Well, there you go.

The authors continue:

Shown in Table 1 are the thirteen questions asked of each AMT respondent. The questions numbered 1-7 relate to scientific literacy (Miller, 1983, 1998). The questions numbered 8-10 provide basic demographic information (gender, age, and education). Interlaced within these ten questions are three simple control questions, which are used to ensure that the respondent reads each question. We published a total of 1037 HITs each of which were completed.

I'm going to interject here and say I think they meant that they posted 1 HIT having 1,037 assignments. Two things make me say this: (1) the authors did not mention anything about repeaters. If they posted 1,037 HITs, they should have problems with repeaters (unless they used the newly implemented NotIn qualification, which essentially implement's AutoMan's exclusion algorithm) and (2) since the authors say they used a default template, that means they constructed the survey using AMT's web interface, rather than constructing it programmatically. It would be difficult to construct 1,037 HITs manually.

The total sample size was decided upon before publishing the HITs, and determined as a number large enough to warrant comparison with the GSS sample.

That sounds fair.

Of the completed HITs, 23 (2.2%) were excluded because the respondent either failed to answer all of the questions, or incorrectly answered one or more of the simple control questions.

There were three control questions in total. The probability of a random respondent answering all three correctly is 0.125, which is not small. Conversely, the control questions may not have been as clear as the authors thought (more on this later).

Continuing:

In half of the HITs, Question 3 was accompanied by a simple illustration of each option (Earth around Sun

and Sun around Earth), to ensure that any incorrect responses were not due to confusion caused by the wording of the question, which was identical to the GSS ballot wordings.

First off, the "half of the HITs" not makes me think that they had two hits, each assigned approximately one half of the 1,037 responents.

Secondly, it's good to know that the wording of the experimental questions was identical to the comparison survey. I am assuming that the comparison survey did not have control questions, since this would be far less of a concern for a telephone survey. However, I don't know and am postponing verifying that in an attempt to actually finish this blog post. :)

However, the illustration did not affect the response accuracy, so we report combined results for both survey versions throughout. A spreadsheet of the survey results are included as Supplemental Material.

There is not a supplemental material section on this version of the paper (dated Oct. 9, 2014 and currently in press for the "Public Understanding of Science" journal). I checked both author's webpages and there was not a supplemental material link anywhere obvious. I suppose we'll have to wait for the article (or email them directly).

Now for the survey questions themselves and the heart of my criticism:

[table id=7 /]

You can see essentially this table in the paper. I added the last column, since I couldn't get the formatting in the table to work. I have two main observations about the questions:

- The wording of the control questions isn't super clear. Strawberries are green for most of their "lives" and only turn red upon maturing. Also, a five hour day could mean five hours of sunlight, a five hour work-day, or some other ambiguous unit of time.

- More importantly though, the design contains ONE WEIRD FLAW -- A respondent could "Christmas tree" the response and get all three control questions correct! (Although "only" four out of the seven real questions would be correct.) This makes me wonder what effect randomization would have on the total survey.

While I'd love to believe that Americans are more scientifically literate than what's generally reported (or even believe that AMT workers are more scientifically literate), I do see some flaws in the study and would like to see a follow-up study for verification.

*While traditionally true, the domain of non-representative polls is currently hot stuff. I've only seen Gelman & Microsoft's work on XBox polls, which used post-stratification and hierarchical models to estimate missing data. As far as I understand it (and I'm not claiming to understand it well), this work relies on two major domains of existing knowledge about the unobserved variables (1) the proportion of the unobserved strata and (2) past behavior/opinions/some kind of prior on that cohort. So, if you're gathering data via XBox for election polling and as a consequence have very little data on what elderly women think, you can use your knowledge about their representation in the current population, along with past knowledge about how they respond to certain variables, to predict how they will respond in this poll. If you are collecting data on a totally new domain, this method won't work (unless you have a reliable proxy). I suspect it also only works in cases where you suspect there to be reasonable stability in a population's responses -- if there is a totally new question in your poll that is unlike past questions and could cause your respondents to radically shift their preferences, you probably couldn't use this technique. I think a chunk of AMT work falls into this category, so representative sampling is a legitimate concern.

What happened to Mark Brendanawicz?

Mark Brendanawicz was a character on NBC's Parks and Recreation for seasons 1 and 2. Before I get into any detail, I wanted to settle something...

What is up with that surname?

I don't know a ton about Polish names (Leslie refers to Mark as Polish in season one), but "Brendanawicz" sounds like a made-up name, like someone was making fun of a guy named Brendan at a party, forgot his last name, and ended up referring to him as "Brendan Brendanawicz." I found a website where you can check whether a name is in the top 150,000 names in the 2000 census. Here's what I get back on Brendanawicz:

As a point of comparison, I queried the surname of a long lost friend from high school:

That's only about 30,000 from the bottom! And now someone I knew at Brandeis:

This is clearly not scientific, nor is it even pretending to be conclusive. Anyway, I guess appearing in the top 150,000 surnames is a bit of a wash. I still think it doesn't sound real.

The real point of this post

Mark begins the show as kind of a playboy and a bit of an ass. When he begins dating Ann, he starts to act less selfish and actually comes across as a good guy. In fact, he just keeps hitting home runs in the good guy department over the course of season two. Here are some reasons why Mark actually turns out to be a good guy:

- Rather than behaving territorially, he shows compassion toward Andy (Ann's ex), who still hangs around, expecting Ann to take him back.

- Unlike Andy, Mark understands that Ann is not a prize, but a person. He doesn't ever try to assert control over her, and he never stoops to the sitcom stereotype.

- Rather than purchasing something useless and typical for Ann's birthday, he buys her a computer case. She had mentioned many weeks before her birthday that she needed one. He listened to what she was saying, wrote it down, and waited to surprise her with one.

- He makes fun of himself. On Valentine's day, he dresses up and enacts all of the cliches, giving Ann a Teddy bear, chocolates, roses, etc., because he's never been in a relationship with someone on Valentine's day. Ann is not dressed up, she's hanging out on the couch, and she's just enjoying his company.

- When Ann fails to introduce Mark as her boyfriend to a childhood friend she's always had a crush on, Mark doesn't get jealous, confront her with it, or otherwise act super dramatic. He has ever right to be hurt -- it was a glaring omission, and her feelings for this friend are obvious. However, he deals with it with grace, even going so far as to talk to Andy about it.

What I appreciated about Mark was that he was a good guy who, despite never having been in a serious relationship before, knew enough about human interaction to treat Ann like a person, and not just TV Girlfriend Stereotype. They had one of the healthiest, most respectful relationships I've ever seen in t.v. Sure, the way the writers began their relationship was kind of shitty, but somehow Ann and Mark worked.

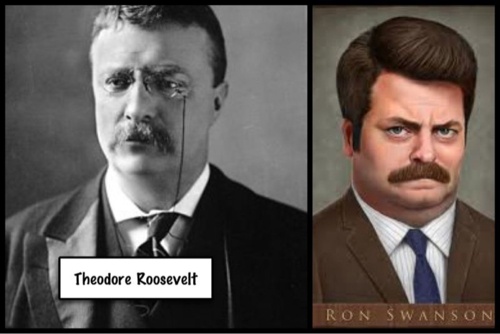

The way they portrayed Mark contrasted with Tom Haverford, who thinks he's starring in Entourage (a show I've never seen), or Ron Swanson, the manliest man or something. Tom hits on women so aggressively, but with such little tact and appeal, that he's turned himself into a joke (a joke who should also be disciplined for sexual harassment on a regular basis). Yeah, his character is funny, but what he does is NOT OKAY. Ron Swanson is Teddy Roosevelt, constantly performing masculinity. He's also quite entertaining, but definitely a caricature.

I very much enjoy watching both Tom and Ron, but having Mark balance them out is a rare treat. You just don't see men behave with such grace, maturity, and self-respect on t.v., without being...well, Captain Adama.

[caption width="500" align="alignnone"] Are all the admirable men on T.V. admirals?[/caption]

Are all the admirable men on T.V. admirals?[/caption]

Formal Methods in Practice

Emery recently sent me a link to an experience report of a collection of engineers at Amazon who used formal methods to debug the designs of AWS systems. It's pretty light on specifics, but they do report some bugs that model checking found and discuss their use of tools. They describe why they turned to formal methods in the first place (hint: it wasn't a love of abstract algebra and proof by induction) and how they pitched formal methods as their solution to a variety of problems at Amazon.

SAT solvers vs...Something Else

The report begins by noting that AWS is massive. As a result, statistically rare events occur with much higher frequency than the [human] users' gut notion of rare events. The first anecdote describes how one of the authors found some issues in "several" of the distributed systems he either designed or reviewed for Amazon. Although they all had extensive testing to ensure that the executed code would comply with the design, the author realized that these tests were inadequate if the design was wrong. Since he was aware that there were problems with edge cases in the design, he turned to formal methods to ensure that the system was actually exhibiting the behaviors he expected.

His first attempt at proving correctness of these systems was to use the Alloy specification language. Alloy's model checking is powered by a SAT solver. At a high level, SAT solvers model many states of the world (i.e., a program or system) as boolean propositions. If we wanted to talk about a distributed system, we might have propositions like MasterServerLive or PacketSent. These propositions have true or false values. Nothing that isn't explicitly defined can be described.

If you are familiar with propositional and predicated logic, this should all be trivial! However, if you're not, you might be wondering what we mean by "nothing that isn't explicitly defined can be described." Consider this: I have 10 computers from 1995 in my basement. I split them into two clusters. Each has one queen and four drones (1). I'd like to state some property foo about each queen. Since I have two queens, I would have two propositions: FooQueen1 and FooQueen2. If I never need to use either of these propositions separately (that is, if they always appear in expressions looking like this: $$FooQueen1 \wedge FooQueen2$$), then I can just define one proposition, $$FooQueen$$. If, however, I want to say something like "foo is true for at least one of the queens," the I would need each of FooQueen1 and FooQueen2.

Every time I want to assert some property of these two queens, I will have to create two propositions to express them. Furthermore, if I want to say something we'll call about a relationship bar between a queen and each of its drones, I will have create variables BarQueen1Drone1, BarQueen1Drone2, ..., BarQueen2Drone4. Any relationship or property that isn't described in this way cannot be reasoned about.

Anyone who has experience with first order logic might point out that there is a much more elegant solution to propositional logic -- we could always just describe these properties as predicates over a universe. Instead of defining two propositions FooQueen1 and FooQueen2, we could say $$\forall x \in \lbrace computers \rbrace: Queen(x) \rightarrow Foo(x)$$, which states that if one of my basement computers is a queen, then foo holds. This expression gives us the possibility of adding another queen to the system without having to explicitly add more propositions.

So far so good, right? Well, there's a another problem with using SAT solvers for distributed systems -- timing information matters. Some processes are redundant, and we need a way to describe concurrency. We could try to define predicates like Before and Concurrently, but again our model explodes in the number of "things" we need to specify. Tools like Alloy have no notion of timing information -- we have to tell it what temporal ordering means.

The author found that Alloy simply was not expressive enough to describe the kinds of concurrency invariants he needed. He instead started using TLA+, a modelling language based on temporal logic, with logical "actions" (I think I learned about this in a class I took on logic in NLP, but I don't recall much about it and the Wikipedia page is empty). This language was expressive enough to describe the systems he wanted to model. Others at Amazon independently came to the same conclusion. Some found a language on top of TLA+, called PlusCal more helpful.

Prejudice

There seems to be a lot of suspicion (if not prejudice) in the "real world" of formal methods. The authors decided to market their approaches as "debugging designs," rather than "proving correctness." This part was the real hook for me. I'll admit, formally proving things can be quite satisfying. Proving correctness or invariance can feel worthy in their own right. Unfortunately, not all problems may be worthy of this effort.

A major problem in the adoption of these tools is that they aren't marketed to the right people in the right way. Pitching formal methods as a way to "debug designs" has a lot of appeal to pragmatists -- it emphasizes the idea that designs themselves may be flawed, and these flaws may be difficult to find. The authors cite medical devices and avionics as fields that value correctness, because the cost of bugs is very high. However, in most software systems, the cost is much lower. A bug that hasn't been exposed in testing is probably quite infrequent. Its infrequency means that the cost of paying someone to ensure a program's correctness outweighs the cost of the consumer's exposure to the bug. What the authors realized, and what they pitched in this paper, was the idea that an event that only occurs 1 out of a billion executions is no longer a rare event in real software systems. There is a mismatch between the scale at which we test and develop and the scale at which we run production code. We may not be able to extrapolate that a program is correct or a system robust to failure on the basis of testing that are orders of magnitude below actual load.

How this relates to me

Our pitch for SurveyMan and related tools is similar to the authors' pitch for using formal methods for large systems. We're starting from the assumption that the design of the survey (or experiment) may be flawed in some way. Rather than deploying costly studies, only to find out months or even years later that there were critical flaws, use formal methods instead. Our tools don't look like formal methods, but they are. We construct a model for the survey on the basis of its structure and a few invariants. We use this data to statically analyze and help debug the results of a survey. The author's model checking and our random respondent simulations are both used to check assumptions about the behavior of the system.

Footnotes

- Technically, the "proper" terminology here is master/slave, but that has an inhumane, insensitive ick factor to it, so I've decided to start calling it a queen/drone architecture instead.

When the needs of the few outweigh the needs of the many

I'm not posting much these days, since I'm currently in California on an internship. I have some posts I'd like to make on notes from the summer, but posts will likely being sparse for the next few months.

However, I'm up thinking about something and thought I'd blog about it.

The facts

A few days ago I received an email from a TA at Prestigious Institution X, asking me to take down my Software Foundations github repository because students were allegedly taking too much "inspiration" from it. My repository contains solutions to a chunk of the textbook, painstakingly solved mostly by me, with some input from Cibele Freire, logician. Posting solutions to a textbook released under the MIT license is, I believe, quite legal. Using those solutions to cheat in a class, however, is typically against an academic institution's honor code. Since there is no course even remotely similar to this at UMass (and in fact, few other resources exist to bootstrap a self-study plan for learning Coq and formal verification), I do not believe I am in violation of UMass' code of student conduct. The email implied none of these things, but I wanted to make sure I wasn't doing anything illegal or malicious in ignorance.

The academic world is a small one, so I consulted with my advisor. He suggested that I email the folks teaching the class, explain my reasons for having a public repo, and offer to make the repository private. The response to my email was indeed a request to make the repository private. I'm putting the previous link to the repository in this blog post, just in case someone comes searching for it and Google happens to index this post:

http://github.com/etosch/software_foundations

If you would like access, please email me directly. I am happy to discuss problems and give people access when it is appropriate to do so. There are plenty of exercises I have not completed, but would like to. I expect anyone who is interested enough to email me to abide by their school's honor code, if applicable.

The principle of the thing

Me taking down my repository does not dissuade cheating. It just buries cheating a little deeper. Dishonesty and plagiarism are pandemic in computer science. This shocked me when I started working in CS. In the humanities, the standards for attribution are quite explicit and stringently applied. Students must routinely attend lectures on academic honesty. I have never seen seen anything even remotely similar in computer science.

Removing the repository from public view shifts the blame from the student plagiarist to the provider of information. At one point in my life, when I thought I would go to grad school for English Literature, I made writing samples publicly available. Would I be culpable for someone plagiarizing my work simply because I wished to promote my work? No one would ask Harold Bloom to stop publishing if a student plagiarized him in a Shakespeare class. Obviously I'm no Harold Bloom.

The perpetrator (plagiarist) here is the one in the wrong.

It's a bit difficult for me to not be personally a little upset/frustrated by this. Not only does it devalue work in our field, but there's something maddening about students at Prestigious University X ruining it for the rest of us. I doubt they think that way, and I doubt anyone would ever put it to them that way. One of the earliest courses I took in computer science was SICP. It was not taught well. Coming from the humanities, where you actually do the reading you're assigned, I read the text. I also did nearly all of the exercises up to the chapter on metacircular evaluation. This self-study was extremely important to my education. When I got stuck on a problem I couldn't solve, I searched for the answer on the internet. Seeing solutions from people who were better programmers than I was made me a better programmer.

Eventually someone else will post solutions and it won't matter much that I took mine down. My solutions are still cached out there and I know some rando forked my repository. A quick search of github for "software foundations" and Coq returns about 80 results and at least one looks fairly complete.